Hadoop Study

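Common commands

```bash
# Show filesystem capacity, usage and DataNode status
hdfs dfsadmin -report
# Permanently delete expired trash checkpoints and create a new checkpoint
hdfs dfs -expunge
```

Hue

```bash
sudo docker run \
  -d --name hue \
  --privileged=true \
  -p 8888:8888 \
  -h hue-docker-1 \
  -v /home/container/hue-docker-1:/home/hue-docker-1-remote \
  gethue/hue:latest

docker exec -ti --user root hue bash
# The following commands run inside the container
cd /usr/share/hue/build/env/bin/
# Set up the database
./hue syncdb
# Initialize the data
./hue migrate
```

Hadoop runtime environment setup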

Errors

When distributing files across the cluster with xsync (rsync already installed), running `xsync /bin` failed with a "command not found" error.

Solution: copy the script you wrote yourself from your own bin directory into the system's bin directory.
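A "command not found" error means the shell found no executable file named `xsync` in any directory listed on `PATH`. A script kept in a personal bin directory is only found while that directory is on the current user's `PATH`; root, or a command run through sudo, often uses a different one. The sketch below is a hypothetical Java illustration of that lookup (`PathLookup` and `which` are made-up names, not part of any Hadoop tooling), handy for checking where, if anywhere, `xsync` resolves.

```java
import java.io.File;
import java.util.Optional;

// Hypothetical helper: mimics how a shell resolves a bare command name by
// scanning the directories listed in PATH, in order.
class PathLookup {

    /** Returns the first executable regular file named {@code command} found on {@code pathEnv}. */
    static Optional<File> which(String command, String pathEnv) {
        if (pathEnv == null || pathEnv.isEmpty()) {
            return Optional.empty();
        }
        for (String dir : pathEnv.split(File.pathSeparator)) {
            if (dir.isEmpty()) {
                continue; // a POSIX shell treats an empty entry as the current directory; skipped here
            }
            File candidate = new File(dir, command);
            if (candidate.isFile() && candidate.canExecute()) {
                return Optional.of(candidate);
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        String command = args.length > 0 ? args[0] : "xsync";
        Optional<File> hit = which(command, System.getenv("PATH"));
        if (hit.isPresent()) {
            System.out.println(command + " -> " + hit.get().getAbsolutePath());
        } else {
            System.out.println(command + ": not found in any PATH directory");
        }
    }
}
```

Run it as the same user (and with or without sudo, matching the failing command), e.g. `java PathLookup xsync`.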

Error when starting the cluster as root:

```text
but there is no HDFS_NAMENODE_USER defined. Aborting operation
```
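Hadoop's launcher scripts refuse to start a daemon as root unless the matching `*_USER` variable is set, which is what the message above reports. The real check is done in shell; the following is only a hypothetical Java illustration (`DaemonUserCheck` is a made-up class) that lists which of the five variables from the solution that follows are still unset or empty in a given environment.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.Map;

// Hypothetical illustration only: Hadoop performs this check in its launcher
// shell scripts, not in Java. Names here are made up for the example.
class DaemonUserCheck {

    // The five variables exported in /etc/profile.d/my_env.sh in this note.
    static final List<String> REQUIRED = Collections.unmodifiableList(Arrays.asList(
            "HDFS_NAMENODE_USER",
            "HDFS_DATANODE_USER",
            "HDFS_SECONDARYNAMENODE_USER",
            "YARN_RESOURCEMANAGER_USER",
            "YARN_NODEMANAGER_USER"));

    /** Returns, in declaration order, the required variables that are unset or empty in {@code env}. */
    static List<String> missing(Map<String, String> env) {
        List<String> result = new ArrayList<>();
        for (String name : REQUIRED) {
            String value = env.get(name);
            if (value == null || value.isEmpty()) {
                result.add(name);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> gaps = missing(System.getenv());
        if (gaps.isEmpty()) {
            System.out.println("All five *_USER variables are defined.");
        } else {
            for (String name : gaps) {
                System.out.println("missing: " + name);
            }
        }
    }
}
```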

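Solution:

Step 1:

Add the following to /etc/profile.d/my_env.sh (e.g. `vim /etc/profile.d/my_env.sh`):

```bash
export HDFS_NAMENODE_USER=root
export HDFS_DATANODE_USER=root
export HDFS_SECONDARYNAMENODE_USER=root
export YARN_RESOURCEMANAGER_USER=root
export YARN_NODEMANAGER_USER=root
```

Reload the environment:

```bash
source /etc/profile
```

Distribute it to the cluster:

```bash
xsync /etc/profile.d/
```

Fix: all Hadoop cluster daemons are running, but the web UI still cannot be reached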

Approach: check the firewall status; it turned out to be running.
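To confirm from the machine running the browser that the web ports are actually blocked (and to check again after stopping the firewall), a plain TCP connect test is enough. The sketch below is a hypothetical helper (`PortProbe` is a made-up name); ports 9870 (NameNode web UI in Hadoop 3.x) and 8088 (YARN ResourceManager web UI) are the stock defaults, assumed here because this note does not list the configured ports.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Minimal TCP reachability probe. A refusal or timeout points at the network
// or firewall, or at a daemon that is not listening on that port.
class PortProbe {

    /** Returns true if a TCP connection to host:port succeeds within timeoutMillis. */
    static boolean isReachable(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            return false; // refused, timed out, or host could not be resolved
        }
    }

    public static void main(String[] args) {
        String host = args.length > 0 ? args[0] : "hadoop100";
        // Assumed defaults: 9870 = NameNode web UI (Hadoop 3.x), 8088 = YARN ResourceManager web UI
        int[] ports = {9870, 8088};
        for (int port : ports) {
            System.out.println(host + ":" + port + " -> "
                    + (isReachable(host, port, 2000) ? "open" : "unreachable"));
        }
    }
}
```

For this note's cluster, `java PortProbe hadoop100` probes both UIs; if they show "unreachable" before stopping firewalld and "open" afterwards, the firewall was the cause.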

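```bash
systemctl status firewalld            # check whether the firewall is running
systemctl stop firewalld              # stop it now
systemctl disable firewalld.service   # keep it from starting at boot
```

HDFS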

HDFS API hands-on examples
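The upload test below (`testCopyFromLocalFile`) sets `dfs.replication` in code to check parameter priority. On the client side, a value set with `configuration.set(...)` wins over an `hdfs-site.xml` placed on the classpath (e.g. under `resources`), which in turn wins over the built-in `hdfs-default.xml`, where `dfs.replication` defaults to 3. The sketch below is a hypothetical model of that lookup order (`LayeredConfig` is a made-up class, not Hadoop's `Configuration`, and the classpath value 1 is invented for the example).

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;

// Hypothetical model of client-side parameter priority. This is NOT Hadoop's
// Configuration class: each layer is just a Map of key -> value.
class LayeredConfig {

    // Layers ordered from highest to lowest priority.
    private final List<Map<String, String>> layers;

    @SafeVarargs
    LayeredConfig(Map<String, String>... highestFirst) {
        this.layers = Arrays.asList(highestFirst);
    }

    /** Returns the value from the highest-priority layer that maps {@code key} to a non-null value. */
    Optional<String> get(String key) {
        for (Map<String, String> layer : layers) {
            String value = layer.get(key);
            if (value != null) {
                return Optional.of(value);
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        Map<String, String> setInCode = new HashMap<>();     // like configuration.set(...)
        setInCode.put("dfs.replication", "2");
        Map<String, String> classpathSite = new HashMap<>(); // hypothetical hdfs-site.xml under resources
        classpathSite.put("dfs.replication", "1");
        Map<String, String> defaults = new HashMap<>();      // hdfs-default.xml
        defaults.put("dfs.replication", "3");

        LayeredConfig conf = new LayeredConfig(setInCode, classpathSite, defaults);
        System.out.println(conf.get("dfs.replication").orElse("<unset>")); // prints 2
    }
}
```

Removing the `configuration.set` line in the real test corresponds to dropping the first layer here: the classpath file, if present, then decides, and otherwise the default of 3 applies.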

```java
package edu.wzq;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.*;
import org.apache.hadoop.io.IOUtils;
import org.junit.Test;

import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.net.URI;
import java.net.URISyntaxException;
import java.util.Arrays;

public class HdfsClient {

    /*
     * Test the connection by creating a directory
     */
    @Test
    public void testMkdirs() throws InterruptedException, IOException, URISyntaxException {
        // 1 Get the file system
        FileSystem fs = FileSystem.get(new URI("hdfs://hadoop100:8020"), new Configuration(), "root");
        // 2 Create the directory
        fs.mkdirs(new Path("/xiyou/huaguoshan/"));
        // 3 Close resources
        fs.close();
    }

    /*
     * Upload a file to HDFS (tests parameter priority)
     */
    @Test
    public void testCopyFromLocalFile() throws IOException, InterruptedException, URISyntaxException {
        // 1 Get the file system; a value set in code overrides the XML configuration files
        Configuration configuration = new Configuration();
        configuration.set("dfs.replication", "2");
        FileSystem fs = FileSystem.get(new URI("hdfs://hadoop100:8020"), configuration, "root");
        // 2 Upload the file
        fs.copyFromLocalFile(new Path("D:/Hadoop/resources/sunwukong.txt"), new Path("/xiyou/huaguoshan/sunwukong.txt"));
        // 3 Close resources
        fs.close();
    }

    /*
     * Download a file from HDFS
     */
    @Test
    public void testCopyToLocalFile() throws IOException, InterruptedException, URISyntaxException {
        // 1 Get the file system
        Configuration configuration = new Configuration();
        FileSystem fs = FileSystem.get(new URI("hdfs://hadoop100:8020"), configuration, "root");
        // 2 Download
        // boolean delSrc: whether to delete the source file
        // Path src: HDFS path of the file to download
        // Path dst: local destination path
        // boolean useRawLocalFileSystem: true writes through RawLocalFileSystem,
        //   so no local .crc checksum file is created
        fs.copyToLocalFile(false, new Path("/xiyou/huaguoshan/sunwukong.txt"), new Path("D:/Hadoop/resources/down_sunwukong.txt"), true);
        // 3 Close resources
        fs.close();
    }

    /*
     * Rename and move files in HDFS
     */
    @Test
    public void testRename() throws IOException, InterruptedException, URISyntaxException {
        // 1 Get the file system
        Configuration configuration = new Configuration();
        FileSystem fs = FileSystem.get(new URI("hdfs://hadoop100:8020"), configuration, "root");
        // 2 Rename the file
        fs.rename(new Path("/xiyou/huaguoshan/sunwukong.txt"), new Path("/xiyou/huaguoshan/meihouwang.txt"));
        // 3 Close resources
        fs.close();
    }

    /*
     * Delete files and directories in HDFS
     */
    @Test
    public void testDelete() throws IOException, InterruptedException, URISyntaxException {
        // 1 Get the file system
        Configuration configuration = new Configuration();
        FileSystem fs = FileSystem.get(new URI("hdfs://hadoop100:8020"), configuration, "root");
        // 2 Delete; true = recursive (required for a non-empty directory)
        fs.delete(new Path("/xiyou"), true);
        // 3 Close resources
        fs.close();
    }

    /*
     * View file details in HDFS
     */
    @Test
    public void testListFiles() throws IOException, InterruptedException, URISyntaxException {
        // 1 Get the file system
        Configuration configuration = new Configuration();
        FileSystem fs = FileSystem.get(new URI("hdfs://hadoop100:8020"), configuration, "root");
        // 2 Get file details; true = recurse into subdirectories (only files are returned)
        RemoteIterator<LocatedFileStatus> listFiles = fs.listFiles(new Path("/"), true);
        while (listFiles.hasNext()) {
            LocatedFileStatus fileStatus = listFiles.next();
            System.out.println("========" + fileStatus.getPath() + "=========");
            System.out.println(fileStatus.getPermission());
            System.out.println(fileStatus.getOwner());
            System.out.println(fileStatus.getGroup());
            System.out.println(fileStatus.getLen());
            System.out.println(fileStatus.getModificationTime());
            System.out.println(fileStatus.getReplication());
            System.out.println(fileStatus.getBlockSize());
            System.out.println(fileStatus.getPath().getName());
            // Block information (which DataNodes hold each block)
            BlockLocation[] blockLocations = fileStatus.getBlockLocations();
            System.out.println(Arrays.toString(blockLocations));
        }
        // 3 Close resources
        fs.close();
    }

    /*
     * Tell files and directories apart in HDFS
     */
    @Test
    public void testListStatus() throws IOException, InterruptedException, URISyntaxException {
        // 1 Get the file system
        Configuration configuration = new Configuration();
        FileSystem fs = FileSystem.get(new URI("hdfs://hadoop100:8020"), configuration, "root");
        // 2 Check whether each entry is a file or a directory
        //   (listStatus is not recursive: only the direct children of "/" are returned)
        FileStatus[] listStatus = fs.listStatus(new Path("/"));
        for (FileStatus fileStatus : listStatus) {
            if (fileStatus.isFile()) {
                // File
                System.out.println("f:" + fileStatus.getPath().getName());
            } else {
                // Directory
                System.out.println("d:" + fileStatus.getPath().getName());
            }
        }
        // 3 Close resources
        fs.close();
    }
}
```

Errors

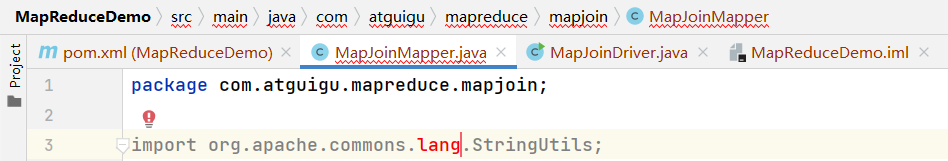

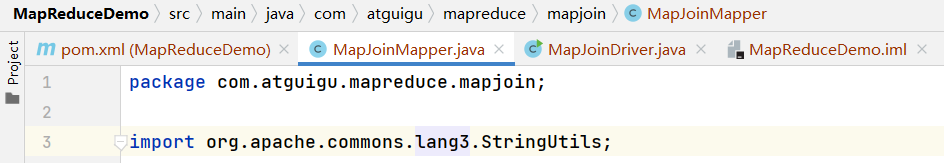

An error occurs at `import org.apache.commons.lang.StringUtils;`.

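Solution: add the version number 3 after `lang`, i.e. `import org.apache.commons.lang3.StringUtils;` (Commons Lang 3 lives in the `org.apache.commons.lang3` package).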


All articles in this blog are licensed under CC BY-NC-SA 4.0 unless stating additionally.
