UsingNote

kafka

docker

docker run -d --name zookeeper -p 2181:2181 -t wurstmeister/zookeeper
docker run -d --name kafka --publish 9092:9092 --link zookeeper \
--env KAFKA_ZOOKEEPER_CONNECT=zookeeper:2181 \
--env KAFKA_ADVERTISED_HOST_NAME=localhost \
--env KAFKA_ADVERTISED_PORT=9092 \
wurstmeister/kafka:latest
docker exec -it kafka bash
cd /opt/kafka/bin

python

demo

Open two Jupyter windows.
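
The demo below serializes keys and values as JSON before sending and decodes them on receipt. A minimal sketch of that round trip, runnable without a broker; the helper names `serialize` and `deserialize` are hypothetical and only mirror the lambdas used in the demo:

```python
import json

def serialize(obj):
    # Same transformation as the key_serializer / value_serializer lambdas
    return json.dumps(obj).encode()

def deserialize(raw):
    # Same transformation the consumer applies to message.key / message.value
    return json.loads(raw.decode())

wire_value = serialize(str(0))
print(wire_value)               # b'"0"'
print(deserialize(wire_value))  # 0 (the string '0', not an int)
```

Because the producer sends `str(i)`, the consumer gets strings back, not integers.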

from kafka import KafkaProducer, KafkaConsumer
from kafka.errors import KafkaError
import traceback
import json

def producer_demo():
    # Messages are assumed to be key-value pairs (not required), serialized as JSON
    producer = KafkaProducer(
        bootstrap_servers=['localhost:9092'],
        key_serializer=lambda k: json.dumps(k).encode(),
        value_serializer=lambda v: json.dumps(v).encode())
    # Send three messages
    for i in range(0, 3):
        future = producer.send(
            'kafka_demo',
            key='count_num',  # messages with the same key go to the same partition
            value=str(i),
            partition=1)  # send to partition 1 (the topic needs at least two partitions)
        print("send {}".format(str(i)))
        try:
            future.get(timeout=10)  # block until the send is acknowledged
        except KafkaError:  # raised when the send fails
            traceback.print_exc()

def consumer_demo():
    consumer = KafkaConsumer(
        'kafka_demo',
        bootstrap_servers='localhost:9092',
        group_id='test'
    )
    for message in consumer:
        print("receive, key: {}, value: {}".format(
            json.loads(message.key.decode()),
            json.loads(message.value.decode())
            )
        )

producer_demo()  # run in one window
consumer_demo()  # run in the other window

gradle

settings.gradle.kts: switch to the Aliyun mirror

pluginManagement {
    repositories {
        // Use the Aliyun mirror addresses
        maven { setUrl("https://maven.aliyun.com/repository/central") }
        maven { setUrl("https://maven.aliyun.com/repository/jcenter") }
        maven { setUrl("https://maven.aliyun.com/repository/google") }
        maven { setUrl("https://maven.aliyun.com/repository/gradle-plugin") }
        maven { setUrl("https://maven.aliyun.com/repository/public") }
        maven { setUrl("https://jitpack.io") }
        gradlePluginPortal()
        google()
        mavenCentral()
    }
}
dependencyResolutionManagement {
    repositoriesMode.set(RepositoriesMode.FAIL_ON_PROJECT_REPOS)
    repositories {
        // Use the Aliyun mirror addresses
        maven { setUrl("https://maven.aliyun.com/repository/central") }
        maven { setUrl("https://maven.aliyun.com/repository/jcenter") }
        maven { setUrl("https://maven.aliyun.com/repository/google") }
        maven { setUrl("https://maven.aliyun.com/repository/gradle-plugin") }
        maven { setUrl("https://maven.aliyun.com/repository/public") }
        maven { setUrl("https://jitpack.io") }
        google()
        mavenCentral()
    }
}
rootProject.name = "DN_Compose"
include(":app")

vnc

vncserver

yum

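Errors

Running yum install commands such as yum update or yum groupinstall "GNOME Desktop" produces a series of errors like Error: Package: avahi-libs-0.6.31-13.el7.x86_64 (@anaconda).

Run the following commands:

yum install yum-utils    # if this command also fails, skip it
# Complete or clean up previously unfinished transactions
yum-complete-transaction
yum history redo last
# List possible duplicate packages
package-cleanup --dupes
# List packages with dependency problems
package-cleanup --problems
# Remove the old versions of duplicate packages
package-cleanup --cleandupes
# After the steps above, update again
yum clean all    # clear the yum cache
yum -y upgrade   # upgrade the packages again
# Fixed!

windows

netsh winsock reset

fish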

Download

On CentOS 7, run the following commands as root:

cd /etc/yum.repos.d/
wget https://download.opensuse.org/repositories/shells:fish:release:3/CentOS_7/shells:fish:release:3.repo
yum install fish

On CentOS-9 Stream, run the following commands as root:

cd /etc/yum.repos.d/
wget https://download.opensuse.org/repositories/shells:fish:release:3/CentOS-9_Stream/shells:fish:release:3.repo
yum install fish

maven

Create a new project

mvn archetype:generate

Mirror

<!-- Configure the Aliyun repository -->

<repositories>

<repository>

<id>aliyun-repos</id>

<url>https://maven.aliyun.com/repository/public</url>

<releases>

<enabled>true</enabled>

</releases>

<snapshots>

<enabled>false</enabled>

</snapshots>

</repository>

</repositories>

<pluginRepositories>

<pluginRepository>

<id>aliyun-repos</id>

<url>https://maven.aliyun.com/repository/public</url>

<releases>

<enabled>true</enabled>

</releases>

<snapshots>

<enabled>false</enabled>

</snapshots>

</pluginRepository>

</pluginRepositories>

<mirror>

<id>aliyunmaven</id>

<mirrorOf>*</mirrorOf>

<name>Aliyun public repository</name>

<url>https://maven.aliyun.com/repository/public</url>

</mirror>

Go

Environment setup

setx GOPATH c:\Codes\goCode

Ubuntu

Errors

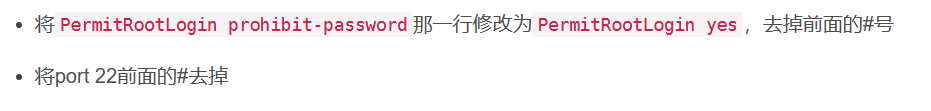

Fixing root authentication failures over SSH or FTP

sudo passwd root
sudo apt install openssh-server
sudo systemctl status ssh
vim /etc/ssh/sshd_config
sudo service ssh restart

Flink

Test program

Standalone mode

Test

flink run /opt/app/flink-1.14.5/examples/batch/WordCount.jar --input hdfs://hadoop201:8020/input/test.txt --output hdfs://hadoop201/output/test1.txt

YARN mode

Session mode

Test

yarn-session.sh -n 2 -tm 800 -s 1 -d
flink run /opt/app/flink-1.14.5/examples/batch/WordCount.jar

Note: this requests 2 CPUs (2 containers × 1 slot) and 1600 MB of memory (2 × 800 MB).

| Flag | Meaning |
|---|---|
| -n | Number of containers to request (2 here), i.e. the number of TaskManagers |
| -tm | Memory size of each TaskManager |
| -s | Number of slots per TaskManager |
| -d | Run detached, in the background |
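
The totals in the note above follow from simple multiplication of the flag values. A minimal sketch of that arithmetic; the function name is hypothetical, and it ignores JobManager memory and any rounding YARN applies to container sizes:

```python
def yarn_session_resources(containers, tm_memory_mb, slots_per_tm):
    """Totals implied by `yarn-session.sh -n <containers> -tm <mb> -s <slots>`."""
    return {
        "task_managers": containers,
        "total_slots": containers * slots_per_tm,
        "total_tm_memory_mb": containers * tm_memory_mb,
    }

# Values from the command above: -n 2 -tm 800 -s 1
totals = yarn_session_resources(containers=2, tm_memory_mb=800, slots_per_tm=1)
print(totals)
```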

Per-Job mode

Test

flink run -m yarn-cluster -yjm 1024 -ytm 1024 /opt/app/flink-1.14.5/examples/batch/WordCount.jar

| Flag | Meaning |
|---|---|
| -m | Address of the JobManager |
| -yjm 1024 | Memory for the JobManager |
| -ytm 1024 | Memory for the TaskManager |

SSH

Common commands

ssh -p 10022 root@localhost
ssh root@22.22.22.22 -p 54321

function ssh-copy-id([string]$userAtMachine, $args){
    $publicKey = "$ENV:USERPROFILE" + "/.ssh/id_rsa.pub"
    if (!(Test-Path "$publicKey")){
        Write-Error "ERROR: failed to open ID file '$publicKey': No such file"
    }
    else {
        & cat "$publicKey" | ssh $args $userAtMachine "umask 077; test -d .ssh || mkdir .ssh ; cat >> .ssh/authorized_keys || exit 1"
    }
}

ssh-copy-id root@192.168.150.129

Errors

git

Common commands

git pull --all
git clone https://github.com/LookSeeWatch/GardenerOS.git
git remote add LookSeeWatch-GardenerOS https://github.com/LookSeeWatch/GardenerOS.git
(enter the token)
git push LookSeeWatch-GardenerOS
git commit -m "Experiment 1"
git checkout -b shiYan1
git branch -D master

Docker

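Test & common commands

sudo docker run \
-d --name c7-d-1 \
--privileged=true \
-p 10022:22 -p 10080:80 \
-h c7-docker-1 \
-v /home/container/c7-d-1:/home/c7-d-1-remote \
centos:7 /usr/sbin/init

| Parameter | Description |
|---|---|
| -d | Run in the background |
| --name | Name of the container, convenient for starting, stopping, restarting, and removing it |
| --privileged=true | Gives the container extended privileges, avoiding permission problems |
| -p 10022:22 -p 10080:80 | Port mappings; several ports can be published at once |
| -h c7-docker-1 | Hostname of the container |
| -v /home/container/c7-d-1:/home/c7-d-1-remote | Maps a host directory to a directory inside the container |
| centos:7 | Local CentOS image and tag |
| /usr/sbin/init | Startup command |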
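
Each `-p` pair reads as host port first, container port second, which is why `ssh -p 10022 root@localhost` in the SSH notes reaches port 22 inside the container. A small sketch of that reading; `parse_port_mappings` is a hypothetical helper, not part of Docker:

```python
from typing import Dict, List

def parse_port_mappings(args: List[str]) -> Dict[int, int]:
    """Map host port -> container port from `-p HOST:CONTAINER` pairs."""
    mappings = {}
    tokens = iter(args)
    for token in tokens:
        if token == "-p":
            host, container = next(tokens).split(":")
            mappings[int(host)] = int(container)
    return mappings

ports = parse_port_mappings("-p 10022:22 -p 10080:80".split())
print(ports)  # {10022: 22, 10080: 80}
```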

sudo docker exec -it c7-d-1 /bin/bash
docker start afad86ff0343
docker exec -it afad86ff0343 /bin/bash
docker cp /root/GardenerOS/bootloader afad86ff0343:/root/mnt/

Inspect

systemctl status docker
docker ps -a
docker images

Remove

docker rm $(docker ps -a -q)
docker system prune -a --volumes
docker rmi $(docker images -q)
docker rmi -f $(docker images -q)

MarkDown

Task list

- [!] Note

- Done

- [?] Question

- ⏫ 📅 2023-03-20 🛫 2023-03-20 ⏳ 2023-03-20

MatPlotLib

Errors

Plots report a missing Chinese font: RuntimeWarning: Glyph 8722 missing from current font
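
The glyph number in the warning is a Unicode code point. A quick standard-library check shows which character it is, which helps explain why the `axes.unicode_minus` setting further down matters:

```python
import unicodedata

glyph = chr(8722)  # code point reported in the warning
print(f"U+{ord(glyph):04X}", unicodedata.name(glyph))  # U+2212 MINUS SIGN
print(glyph == "-")  # False: it is not the ASCII hyphen-minus
```

With `axes.unicode_minus` set to False, Matplotlib draws the ASCII hyphen for negative tick labels, so a font that lacks U+2212 no longer triggers the warning.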

D:\Projects\Python\torchDemo\torch-venv\Lib\site-packages\matplotlib\mpl-data\fonts\ttf

import matplotlib
print(matplotlib.matplotlib_fname())

import matplotlib
print(matplotlib.get_cachedir())

import matplotlib.pyplot as plt
import matplotlib
matplotlib.rcParams['font.family'] = 'SimHei'
matplotlib.rcParams['font.size'] = 10
matplotlib.rcParams['axes.unicode_minus'] = False
''' The following statements work as well
plt.rcParams['font.family'] = 'SimHei'
plt.rcParams['font.size'] = 10
plt.rcParams['axes.unicode_minus'] = False
'''

Android studio

openCV

javah -encoding UTF-8 com.example.opencvdemo.MainActivity

Errors

Won't open

Delete the Android Studio folders under C:\Users\30337\AppData\Roaming\Google and C:\Users\30337\AppData\Local\Google.

openVino

Download a Model and Convert it into OpenVINO™ IR Format

omz_downloader --name <model_name>
omz_converter --name <model_name>

Test

mo -h

Redis

Start

sudo service redis-server start
redis-cli
127.0.0.1:6379> ping
PONG

Anaconda

Mirror sources

conda config --set show_channel_urls yes

ssl_verify: true
channels:
  - defaults
show_channel_urls: true
default_channels:
  - http://mirrors.aliyun.com/anaconda/pkgs/main
  - http://mirrors.aliyun.com/anaconda/pkgs/r
  - http://mirrors.aliyun.com/anaconda/pkgs/msys2
custom_channels:
  conda-forge: http://mirrors.aliyun.com/anaconda/cloud
  msys2: http://mirrors.aliyun.com/anaconda/cloud
  bioconda: http://mirrors.aliyun.com/anaconda/cloud
  menpo: http://mirrors.aliyun.com/anaconda/cloud
  pytorch: http://mirrors.aliyun.com/anaconda/cloud
  simpleitk: http://mirrors.aliyun.com/anaconda/cloud

conda clean -i

MongoDB

Install

Start

sudo systemctl start mongod
mongosh "mongodb://localhost:27017"

Linux

Listening ports

netstat -ntlp

Firewall

systemctl status firewalld
systemctl disable firewalld.service

MapReduce

Test

hadoop jar /opt/app/hadoop-3.2.0/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.0.jar wordcount /demo/wordCount.txt /demo/output

Errors

/bin/bash: /bin/java: No such file or directory

Fix

ln -s /opt/app/jdk1.8.0_361/bin/java /bin/java

hbase

phoenix

python /opt/app/phoenix-hbase-2.5-5.1.3-bin/bin/sqlline.py hadoop201,hadoop202,hadoop203:2181

Errors

ERROR: KeeperErrorCode = NoNode for /hbase/master

Fix

hive

JDBC connection

hive --service hiveserver2
hive --service metastore
beeline -u jdbc:hive2://hadoop201:10000 -n root

Errors

Permission denied: user=anonymous, access=EXECUTE, inode="/tmp/hadoop-yarn":root:supergroup:drwxrwx---
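
The directory mode at the end of this error (`drwxrwx---`, octal 770) grants nothing to users outside the owner and group. A minimal sketch decoding it with Python's `stat` module, assuming `anonymous` is neither `root` nor a member of `supergroup`:

```python
import stat

mode = stat.S_IFDIR | 0o770  # directory, rwx for owner and group only
print(stat.filemode(mode))   # drwxrwx---

# EXECUTE on a directory means permission to traverse it
others_can_traverse = bool(mode & stat.S_IXOTH)
print(others_can_traverse)   # False
```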

Fix

hdfs-site.xml

<property>

<name>dfs.permissions.enabled</name>

<value>false</value>

</property>

MySQL

Errors

Start

systemctl start mysqld
systemctl enable mysqld
mysql -u root -p

spark

Test program

YARN mode

Test

spark-submit --class org.apache.spark.examples.SparkPi --master yarn --deploy-mode cluster /opt/app/spark-3.2.3-bin-hadoop3.2/examples/jars/spark-examples_2.12-3.2.3.jar 10

spark shell

Test
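
The Scala one-liner in this test multiplies each element by 10 and then sums the results. As a sanity check without a cluster, an equivalent in plain Python (not Spark) gives the value to expect from the shell:

```python
from functools import reduce

data = [1, 2, 3, 33, 55]
# map(e => e * 10) followed by reduce((a, b) => a + b)
result = reduce(lambda a, b: a + b, map(lambda e: e * 10, data))
print(result)  # 940
```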

sc.parallelize(List(1,2,3,33,55)).map(e=>e*10).reduce((a,b)=>a+b)

Maven dependencies

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/maven-v4_0_0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>spark.demo</groupId>

<artifactId>spark.demo</artifactId>

<version>1.0-SNAPSHOT</version>

<inceptionYear>2008</inceptionYear>

<properties>

<scala.version>2.12.8</scala.version>

</properties>

<dependencies>

<!-- Scala library dependency -->

<dependency>

<groupId>org.scala-lang</groupId>

<artifactId>scala-library</artifactId>

<version>2.12.8</version>

</dependency>

<!-- Spark component libraries ================================================================== -->

<!-- Spark core -->

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-core_2.12</artifactId>

<version>3.2.1</version>

</dependency>

<!-- Spark SQL -->

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-sql_2.12</artifactId>

<version>3.2.1</version>

</dependency>

<!-- Spark Streaming -->

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-streaming_2.12</artifactId>

<version>3.2.1</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.kafka/kafka-clients -->

<dependency>

<groupId>org.apache.kafka</groupId>

<artifactId>kafka-clients</artifactId>

<version>3.2.1</version>

</dependency>

<!-- Spark Streaming integration for Kafka -->

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-streaming-kafka-0-10_2.12</artifactId>

<version>3.2.1</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.spark/spark-sql-kafka-0-10 -->

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-sql-kafka-0-10_2.12</artifactId>

<version>3.2.1</version>

</dependency>

<!-- GraphX -->

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-graphx_2.12</artifactId>

<version>3.2.1</version>

</dependency>

<!-- Libraries for reading and writing HBase from Spark ================================================================== -->

<!-- Hadoop common API -->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>3.3.1</version>

</dependency>

<!-- Hadoop client API -->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>3.3.1</version>

</dependency>

<!-- HBase client API -->

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-client</artifactId>

<version>2.4.9</version>

</dependency>

<!-- HBase API for MapReduce -->

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-mapreduce</artifactId>

<version>2.4.9</version>

</dependency>

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-server</artifactId>

<version>2.4.9</version>

</dependency>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.4</version>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.specs</groupId>

<artifactId>specs</artifactId>

<version>1.2.5</version>

<scope>test</scope>

</dependency>

<!-- https://mvnrepository.com/artifact/org.scala-tools/maven-scala-plugin -->

<dependency>

<groupId>org.scala-tools</groupId>

<artifactId>maven-scala-plugin</artifactId>

<version>2.12</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.maven.plugins/maven-eclipse-plugin -->

<dependency>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-eclipse-plugin</artifactId>

<version>2.5.1</version>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<configuration>

<source>7</source>

<target>7</target>

</configuration>

</plugin>

</plugins>

</build>

</project>

pip

Commands

pip install -r requirements.txt --use-pep517
pip install --upgrade jupyter

Errors

ERROR: Can not perform a '--user' install. User site-packages are not visible in this virtualenv

python -m pip install tensorflow

ModuleNotFoundError: No module named 'pip'

python -m ensurepip

pip.conf

pip -v config list

[global]
index-url = https://mirrors.aliyun.com/pypi/simple/
[install]
trusted-host=mirrors.aliyun.com

pip3 config set global.extra-index-url http://mirrors.aliyun.com/pypi/simple

cache

- Purge command

pip cache purge

- Cache directory

pip cache dir
C:\Users\30337\AppData\Local\pip\cache

Mirror source

-i https://pypi.mirrors.ustc.edu.cn/simple

Sublime

Plugins

CoolFormat

[

{

"caption": "CoolFormat",

"children": [

{

"caption": "CoolFormat: Quick Format",

"command": "coolformat",

"args": {

"action": "quickFormat"

}

},

{

"caption": "CoolFormat: Selected Format",

"command": "coolformat",

"args": {

"action": "selectedFormat"

}

},

{

"caption": "CoolFormat: Formatter Settings",

"command": "coolformat",

"args": {

"action": "formatterSettings"

}

}

]

}

]

Configuration file

{

"font_face": "Ubuntu Mono",

"font_size": 15,

"font_options": ["no_italic","gdi"],

"word_wrap": "true",

"theme": "Default.sublime-theme",

"color_scheme": "Celeste.sublime-color-scheme",

"save_on_focus_lost": true,

"ignored_packages":["Vintage"],

"auto_complete": true,

}

Abnormal Chinese font rendering

"font_face": "Source Code Pro",
"font_size": 14,
"font_options": ["gdi"]

torch

pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu117

Environment test

Test

import torch

import os

os.environ["CUDA_VISIBLE_DEVICES"] = "0"

print(torch.cuda.device_count())

print(torch.cuda.is_available())

print(torch.backends.cudnn.is_available())

print(torch.version.cuda)
print(torch.backends.cudnn.version())

visdom

python -m visdom.server

Starting Dive-into-DL-PyTorch

First enter the project directory, then run:

docsify serve docs

Errors

CUDA cannot be used after installing PyTorch

The wrong torch build was installed; reinstall a CUDA build:

pip3 install torch==1.11.0+cu113 torchvision==0.12.0+cu113 torchaudio==0.11.0+cu113 -f https://download.pytorch.org/whl/cu113/torch_stable.html

import torch fails with NameError: name '_C' is not defined

paddle

GPU acceleration

import paddle
print(paddle.device.get_device())
paddle.device.set_device('gpu:0')  # paste the value returned by get_device()

Install

pip

python -m pip install paddlepaddle-gpu==2.4.2.post117 -f https://www.paddlepaddle.org.cn/whl/windows/mkl/avx/stable.html

Environment test

Test

import paddle
paddle.utils.run_check()

Errors

AssertionError: In PaddlePaddle 2.x, we turn on dynamic graph mode by default, and 'data()' is only

import paddle
paddle.enable_static()

Downloads

How to download DLL files

Download them directly from https://www.dll-files.com/search/

CUDA

Mirror

China mirror

https://developer.nvidia.cn/downloads

Check the CUDA version

nvidia-smi
nvcc -V

View basic device information

C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.7\extras\demo_suite>deviceQuery.exe

Verify the installation

C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.7\extras\demo_suite

Configuring CUDA in CLion

Reference: CSDN blog post "CLION配置CUDA环境" (Configuring a CUDA environment in CLion) by 衍生动物

jupyter notebook

Errors

Crashes on startup

ImportError: cannot import name 'ensure_async' from 'jupyter_core.utils' (D:\Anaconda\lib\site-packages\jupyter_core\utils\__init__.py)

Switching to a different version made it start successfully:

pip install jupyter_core==5.1.2

PyCharm connection error

"ImportError: DLL load failed: 找不到指定的模块" (the specified module could not be found)

pip install pyzmq --force-reinstall

Reference: CSDN blog post by StudywithDyn on the "ImportError: DLL load failed" message when starting Jupyter Notebook

After that, the error reads: No module named 'pysqlite2'

Change the default directory

jupyter notebook --generate-config

Open the configuration file and change the setting to:

c.NotebookApp.notebook_dir = 'D:\\myJupyterFile'

NPM

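One error message suggested an npm version problem, since npm 7 is stricter when building.

Switching to npm 6 and npm 8 did not help, nor did several alternative clients (cnpm, pnpm).

The final solution:

1. Switch the npm registry to the Tencent mirror (installs failed with the taobao address; other registry mirrors may also be worth trying).

npm config set registry http://mirrors.cloud.tencent.com/npm/

2. Then run this install command:

npm install --legacy-peer-deps

3. Start:

npm run serve --fix

WSL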

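wsl --list --verbose

Reference: CSDN blog post "Windows10访问Ubuntu子系统(WSL)的桌面环境" (Accessing the desktop environment of the Ubuntu subsystem (WSL) from Windows 10) by -_-void

Errors

Setting up whoopsie (0.2.77)
Failed to preset unit: Transport endpoint is not connected
/usr/bin/deb-systemd-helper: error: systemctl preset failed on whoopsie.path: No such file or directory

sudo rm -rf /etc/acpi/events

/usr/lib/wsl/lib/libcuda.so.1 is not a symbolic link

The cause is a broken symbolic link for libcuda.so.1. The correct and simpler fix is:

cd /usr/lib/wsl/lib/    # enter the directory named in the error
sudo rm -rf libcuda.so.1 libcuda.so    # delete the two symlink files
sudo ln -s libcuda.so.1.1 libcuda.so.1
sudo ln -s libcuda.so.1.1 libcuda.so    # recreate the symlinks
sudo ldconfig    # verify the result

Error 0x80070003 or error 0x80370102

Possible causes:

1. Virtualization is not enabled in the computer's BIOS.
2. VMware conflicts with WSL.

Solutions:

1. Enable virtualization in the BIOS; the exact steps depend on the machine and can be looked up online.
2. This was the problem I hit. I found no way to make the two coexist, so only one can be used at a time.

To enable WSL, use:

bcdedit /set hypervisorlaunchtype auto

To enable VMware, use:

bcdedit /set hypervisorlaunchtype off

Start

sudo service xrdp restart
sudo google-chrome --no-sandbox
fish_config

Mirror sources

Download the latest source list for your release: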

LUG’s repo file generator (ustc.edu.cn)